Building a Blog Image Host with Cloudflare + Backblaze + PicList (No Custom Domain Required)

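(I was bored on a train, so I started writing a blog post. My dedication is truly moving…)

I used to use a GitHub repository as my image host, with PicGo uploading automatically. But GitHub limits both repository size and individual file size, and I also felt this was more or less an abuse of GitHub. I recently finished setting up a new image host and it has been running well, so I am recording it here (I got tired partway through and started mixing Chinese and English; I only need to be able to follow it myself, so please bear with me).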

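Among the many object storage providers (Cloudflare, Wasabi, AWS, Scaleway, IBM Cloud Object Storage, Azure, Oracle), Backblaze is the only one that can be used without adding payment information. The restriction is that such an account can only create private buckets. You can also pay $1 for verification and then create public buckets. One dollar is not the problem, but I did not want to add payment details hastily before understanding how everything works, and I would like the information tied to this online account to stay as separate from my real identity as possible.

Other tutorials for reference: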

- Deliver Private Backblaze B2 Content Through Cloudflare CDN

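- Cloudflare + Backblaze 实现免费的博客图床方案 - Leo’s blog (in English: a free blog image hosting setup with Cloudflare + Backblaze)

- Blog 图床方案:Backblaze B2 (私密桶) + Cloudflare Workers + PicGo | Standat’s Blog (in English: blog image hosting with a private Backblaze B2 bucket + Cloudflare Workers + PicGo)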

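I don't have my own domain, so the steps in those tutorials don't quite apply to me. The worker part is written entirely around my own needs. This post is a record of the process, not a tutorial, so take from it what fits your case.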

Backblaze

Backblaze free account plan:

Backblaze B2 has a free account with 10GB of storage. The free account includes 1GB of daily download bandwidth, 2,500 class B transactions connected to downloads and 2,500 class C transactions.

That is enough for a blog image host.

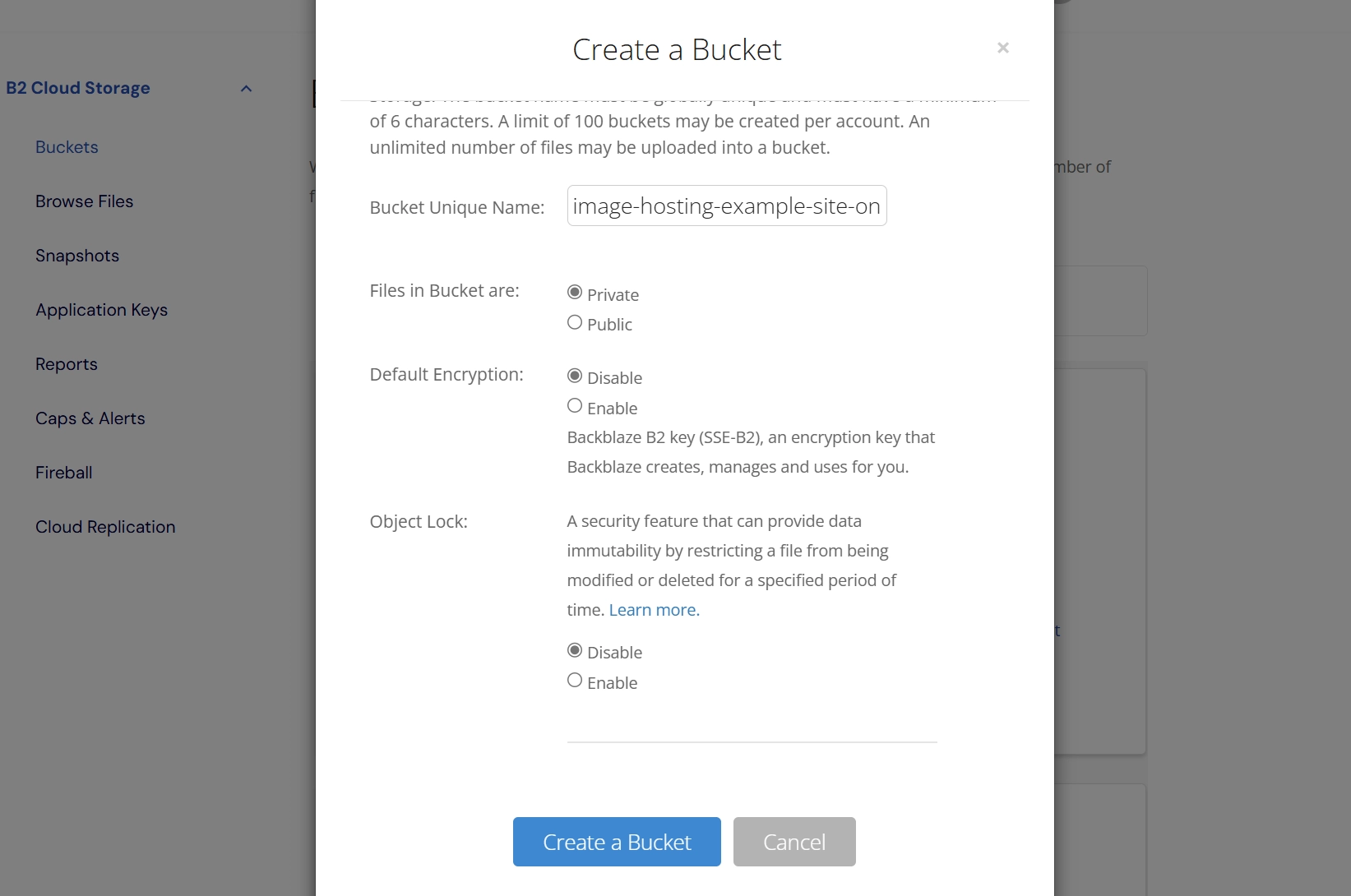

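Create a bucket

Sign up on the official website, create a new private bucket, and give it a name (as complex as possible).

In Bucket Settings, add the following to Bucket Info: `{"cache-control": "public, max-age=86400"}`. The number can be raised; 86400 seconds means caching for one day.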
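The `max-age` value is just a number of seconds, so other lifetimes are simple arithmetic. A minimal sketch of that calculation follows; `bucketInfoForDays` is a hypothetical helper written for illustration, not something Backblaze provides.

```javascript
// Hypothetical helper: build the Bucket Info JSON for a cache lifetime in days.
const SECONDS_PER_DAY = 24 * 60 * 60; // 86400

function bucketInfoForDays(days) {
  const maxAge = Math.round(days * SECONDS_PER_DAY);
  return JSON.stringify({ "cache-control": `public, max-age=${maxAge}` });
}

console.log(bucketInfoForDays(1));   // {"cache-control":"public, max-age=86400"}
console.log(bucketInfoForDays(365)); // {"cache-control":"public, max-age=31536000"}
```

The printed string can be pasted into the Bucket Info field as-is.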

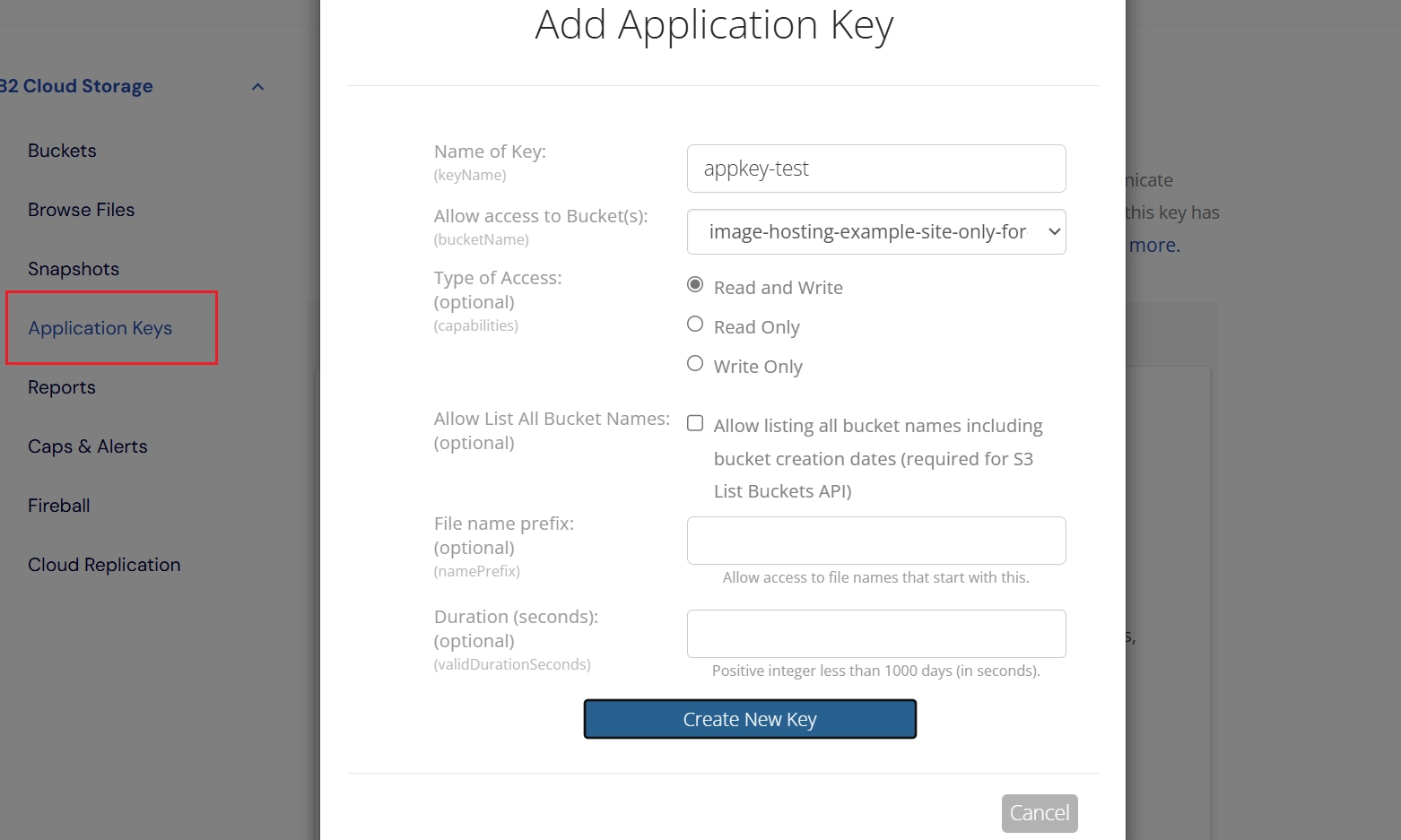

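Create application keys

Create two application keys. I did this to keep PicList uploads separate from Cloudflare access: the former key has Read and Write permission, the latter is Read Only. If you don't mind sharing, you can create just one key, but its permission then has to be Read and Write.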

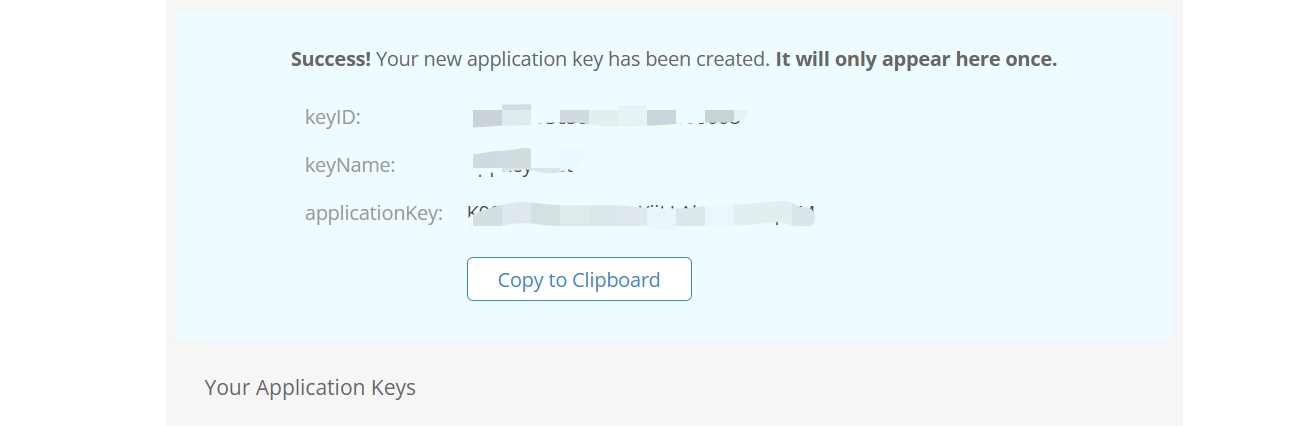

The next page is shown only once, so copy the values of keyID, keyName, and applicationKey.

Cloudflare

Create a worker

Copy the following code.

export default {
  async fetch(request, env) {
    const API_KEY_ID = env.API_KEY_ID; // Application Key ID
    const API_KEY = env.API_KEY; // Application Key
    const BUCKET_NAME = env.BUCKET_NAME; // Bucket Name
    const KV_NAMESPACE = env.KV_NAMESPACE; // KV namespace for rate limiting
    const ALLOWED_REFERERS = [
      "https://site1/",
      "https://site2/",
      "https://site3/",
      "https://localhost:1313/",
    ]; // List of allowed referers
    const MAX_REQUESTS_PER_DAY = 1000; // Limit to 1000 Backblaze fetches per file per day

    const url = new URL(request.url);
    const filePath = url.pathname.slice(1); // Extract file path from URL
    if (!filePath) {
      return new Response("File path not provided", { status: 400 });
    }

    // Step 1: Validate Referer header (requests without a Referer are allowed)
    const referer = request.headers.get("Referer");
    // startsWith, so a URL that merely contains an allowed address elsewhere does not pass
    const isAllowedReferer =
      !referer || ALLOWED_REFERERS.some((allowed) => referer.startsWith(allowed));
    if (!isAllowedReferer) {
      return new Response("Invalid referer", { status: 403 });
    }

    // Access Cloudflare Cache API
    const cache = caches.default;

    // Check if the response is already in cache
    const cachedResponse = await cache.match(request);
    if (cachedResponse) {
      console.log(`Cache hit for: ${filePath}`);
      return cachedResponse; // Serve from cache
    }

    // Step 2: Rate limiting using KV
    const currentDate = new Date().toISOString().split("T")[0]; // YYYY-MM-DD
    const requestCountKey = `${currentDate}:${filePath}`;
    // KV returns a string (or null when the key is missing), so parse it first
    let requestCount = parseInt((await KV_NAMESPACE.get(requestCountKey)) || "0", 10);
    if (requestCount >= MAX_REQUESTS_PER_DAY) {
      return new Response("Daily limit reached for this file", { status: 429 });
    }
    requestCount += 1;
    // Store the counter as a string; the key expires 24 hours after the last write
    await KV_NAMESPACE.put(requestCountKey, String(requestCount), { expirationTtl: 86400 });
    console.log(`Request count for ${filePath}: ${requestCount}`);
    console.log(`Cache miss for: ${filePath}. Fetching from Backblaze.`);

    // Step 3: Authenticate with Backblaze
    const authResponse = await fetch("https://api.backblazeb2.com/b2api/v2/b2_authorize_account", {
      headers: {
        Authorization: `Basic ${btoa(`${API_KEY_ID}:${API_KEY}`)}`,
      },
    });
    const authResponseText = await authResponse.text();
    if (!authResponse.ok) {
      return new Response(`Authorization failed: ${authResponseText}`, { status: 500 });
    }
    const authData = JSON.parse(authResponseText);
    const authToken = authData.authorizationToken;
    const downloadUrl = authData.downloadUrl; // Base URL for downloads

    // Step 4: Construct the file URL
    const b2FileUrl = `${downloadUrl}/file/${BUCKET_NAME}/${filePath}`;

    // Step 5: Fetch the file from Backblaze
    const fileResponse = await fetch(b2FileUrl, {
      headers: {
        Authorization: authToken,
      },
    });
    if (!fileResponse.ok) {
      const errorText = await fileResponse.text();
      return new Response(`Failed to fetch file from Backblaze: ${errorText}`, { status: 404 });
    }

    // Build a cacheable response from the Backblaze body
    const responseToCache = new Response(fileResponse.body, {
      headers: {
        // Fall back to a generic type if Backblaze did not send one
        "Content-Type": fileResponse.headers.get("Content-Type") || "application/octet-stream",
        "Cache-Control": "public, max-age=31536000", // Cache for 1 year
      },
    });

    // Store a copy of the response in cache
    await cache.put(request, responseToCache.clone());

    // Return the fetched response to the client
    return responseToCache;
  },
};

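A few customizable values:

- ALLOWED_REFERERS: the sites allowed to embed these image URLs

- MAX_REQUESTS_PER_DAY: the maximum number of times per day that Cloudflare may fetch from Backblaze (the counter key includes the file path, so the limit applies per file)

- max-age: how long Cloudflare caches an image; I set it to one year.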

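What this worker does: it uses the application key to access the private bucket, fetches the image, caches it on Cloudflare, and serves it at a publicly reachable Cloudflare worker address. ALLOWED_REFERERS is there to stop other sites from hotlinking, since my free account has limited bandwidth. Requests with no Referer header are still allowed, which is what lets me preview images in Obsidian.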
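The referer rule described here (allow requests with no Referer, otherwise require an allowed site) can be isolated into a small function. The following is a minimal sketch that compares parsed origins rather than raw strings, so a URL that only mentions an allowed site in its query string is rejected. The name `isAllowedReferer`, the `ALLOWED_ORIGINS` list, and the example hosts are assumptions for illustration.

```javascript
// Hypothetical allow-list of origins (scheme + host + port, no path).
const ALLOWED_ORIGINS = ["https://site1", "https://site2"];

function isAllowedReferer(referer, allowedOrigins = ALLOWED_ORIGINS) {
  if (!referer) return true; // no Referer header (e.g. a desktop app preview): allow
  let origin;
  try {
    origin = new URL(referer).origin;
  } catch {
    return false; // malformed Referer: reject
  }
  return allowedOrigins.includes(origin);
}

console.log(isAllowedReferer(null));                                     // true
console.log(isAllowedReferer("https://site1/posts/hello/"));             // true
console.log(isAllowedReferer("https://evil.example/?u=https://site1/")); // false
```

In a Worker, the first argument would be `request.headers.get("Referer")`.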

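In addition, a KV Namespace is bound to the worker to cap how many requests Cloudflare can send to Backblaze per day. This is to keep class C transactions from going over quota. One day, for no obvious reason, Cloudflare sent more than 2,000 authorize requests to Backblaze, so I added this just in case.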
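One further mitigation, which the worker in this post does not do, would be to reuse the Backblaze authorization instead of calling `b2_authorize_account` on every cache miss. The sketch below stores the token in KV under a hypothetical key; `getB2Auth`, `AUTH_CACHE_KEY`, and the 12-hour TTL are assumptions, chosen on the basis that B2 authorization tokens are valid for at most 24 hours.

```javascript
const AUTH_CACHE_KEY = "b2-auth";       // hypothetical KV key name
const AUTH_TTL_SECONDS = 12 * 60 * 60;  // assumption: refresh well inside the 24-hour token lifetime

// `kv` is a KV binding (or any object with async get/put).
// `authorize` is an async function that performs the b2_authorize_account
// request and resolves to its parsed JSON body.
async function getB2Auth(kv, authorize) {
  const cached = await kv.get(AUTH_CACHE_KEY);
  if (cached) {
    return JSON.parse(cached); // reuse the stored token, no Backblaze call
  }
  const authData = await authorize();
  const auth = {
    authorizationToken: authData.authorizationToken,
    downloadUrl: authData.downloadUrl,
  };
  // KV deletes the entry after the TTL, which forces a fresh authorization
  await kv.put(AUTH_CACHE_KEY, JSON.stringify(auth), { expirationTtl: AUTH_TTL_SECONDS });
  return auth;
}
```

With something like this, `authorize` would wrap the existing `fetch` to `b2_authorize_account`, and the rest of the worker would read `authorizationToken` and `downloadUrl` from the returned object. If Backblaze rejects a stored token, the entry would need to be deleted and the request retried; that handling is left out here.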

Create a KV Namespace

I'm too tired to keep writing, so here is what ChatGPT wrote.

Create a KV Namespace

- Go to your Cloudflare dashboard.

- Navigate to **Workers** > **KV** > **Create a Namespace**.

- Give your namespace a name (e.g., `daily-usage-tracker`).

Bind the Namespace to Your Worker

- After creating the namespace, you'll need to bind it to your Worker.

- Go to your Worker script in the Cloudflare dashboard.

- Under the **Settings** tab, find the **KV Namespace Bindings** section.

- Add a binding by giving it a name (e.g., `KV_NAMESPACE`) and selecting the namespace you created earlier.

Use the Namespace in Your Script

- The binding (`KV_NAMESPACE`) will be available in the `env` object passed to your Worker script.
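As a sketch of how the binding is used from code, the function below reads and increments a per-day, per-file counter through `env.KV_NAMESPACE`. The function name `allowRequest` and its parameters are hypothetical. KV returns stored values as strings (or `null` for a missing key), and it is eventually consistent, so a counter like this is approximate rather than exact.

```javascript
// Returns true and records the request if `filePath` is still under its
// daily limit; returns false once the limit has been reached.
async function allowRequest(env, filePath, maxPerDay, now = new Date()) {
  const day = now.toISOString().split("T")[0]; // YYYY-MM-DD (UTC)
  const key = `${day}:${filePath}`;
  const stored = await env.KV_NAMESPACE.get(key); // string or null
  const count = stored === null ? 0 : parseInt(stored, 10);
  if (count >= maxPerDay) {
    return false;
  }
  // Write the new count as a string and let the key expire after 24 hours
  await env.KV_NAMESPACE.put(key, String(count + 1), { expirationTtl: 86400 });
  return true;
}
```

Inside a Worker's `fetch(request, env)` handler this could be called as `await allowRequest(env, filePath, 1000)`, returning a 429 response when it resolves to `false`.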

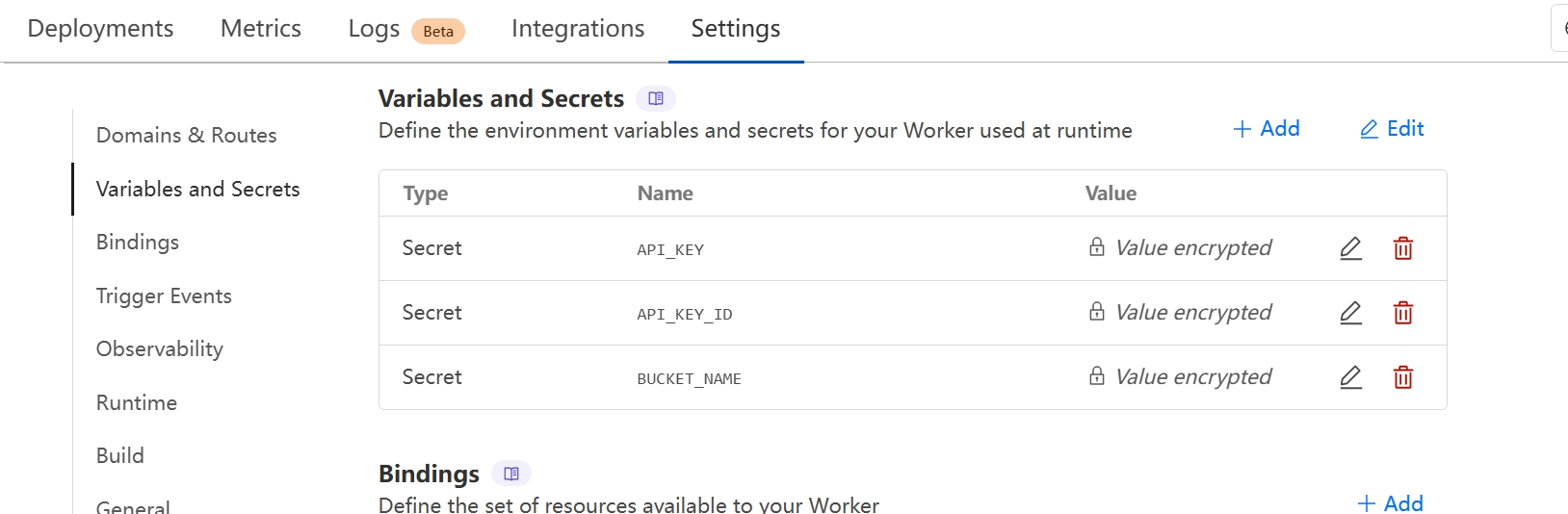

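Create secrets

Create the following secrets in the worker settings:

- API_KEY_ID

- API_KEY

- BUCKET_NAME

The values come from the Read Only application key created above for Cloudflare: keyID goes into API_KEY_ID and applicationKey into API_KEY. BUCKET_NAME is the name of the bucket.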
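A missing secret shows up in the worker as `undefined` on `env`, which can be hard to spot from a failed Backblaze response. The following is a small sketch of a check that could run at the top of the handler; `missingSecrets` and `REQUIRED_SECRETS` are hypothetical names, not part of the Workers API.

```javascript
// Names of the secrets the worker reads from `env`.
const REQUIRED_SECRETS = ["API_KEY_ID", "API_KEY", "BUCKET_NAME"];

// Returns the names of any secrets that are unset or empty.
function missingSecrets(env) {
  return REQUIRED_SECRETS.filter((name) => !env[name]);
}

console.log(missingSecrets({ API_KEY_ID: "id", BUCKET_NAME: "bucket" })); // [ 'API_KEY' ]
```

If the returned array is not empty, the handler could answer with a 500 that lists the missing names instead of attempting the Backblaze call.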

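PicList

PicGo also supports S3 image hosting, but it needs a separate plugin. Perhaps because the project has gone unmaintained for a long time, installing plugins fails frequently. Installing exif remover earlier already took a lot of effort: reinstalling npm packages, setting an npm proxy, and so on. PicList has these features built in, so I simply migrated.

I downloaded the PicList Windows exe and it would not install either. What is wrong with Microsoft? With a headache, I was left with installing from the command line, which in turn meant installing Scoop first.

Install Scoop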

Open a PowerShell terminal (version 5.1 or later) and from the PS C:\> prompt, run:

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

Invoke-RestMethod -Uri https://get.scoop.sh | Invoke-Expression

How to open PowerShell at the PS C:\> prompt

Press Windows + R, type powershell, and hit Enter.

cd C:\

Install PicList

scoop bucket add lemon https://github.com/hoilc/scoop-lemon

scoop install lemon/piclist

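Import PicGo config

PicGo settings can be imported with one click. If you were already using S3 or another image host there, nothing needs to be configured again.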

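Configure the S3 account

Now set up the S3 image host in PicList. These are the fields to fill in.

- AccessKeyID: the keyID of the Read and Write application key

- SecretAccessKey: the applicationKey of that same key

- Bucket: bucket name

- Self Endpoint: https://{endpoint}

- Set ACL: private

- Self Custom URL: the Cloudflare worker address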
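To make the role of Self Custom URL concrete: the link PicList hands back is the worker address plus the object key, and the worker turns that path into a Backblaze download URL. The sketch below shows both mappings as plain string operations. The function names, the worker host `img.example.workers.dev`, the download host, and the bucket name are all placeholders.

```javascript
// Public link for an uploaded object, as a reader's browser would request it.
function publicUrl(workerBase, objectKey) {
  return `${workerBase.replace(/\/+$/, "")}/${objectKey}`;
}

// Backblaze URL the worker would fetch for that request
// (same shape as `${downloadUrl}/file/${BUCKET_NAME}/${filePath}` in the worker).
function b2Url(downloadUrl, bucketName, requestUrl) {
  const filePath = new URL(requestUrl).pathname.slice(1);
  return `${downloadUrl}/file/${bucketName}/${filePath}`;
}

const link = publicUrl("https://img.example.workers.dev/", "blog/2024/cat.png");
console.log(link);
// https://img.example.workers.dev/blog/2024/cat.png
console.log(b2Url("https://f000.example.com", "my-bucket", link));
// https://f000.example.com/file/my-bucket/blog/2024/cat.png
```

The first URL is the only one that appears in a post; the second never leaves the worker.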

Obsidian

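The Image auto upload plugin in Obsidian works with both PicGo and PicList, so if you installed it before, nothing needs to change.

For the details of my image upload workflow, see My Blog Writing Workflow.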
